AI has moved beyond theoretical research to become a core technology used across services and industries. We’re now familiar with AI that generates sentences, understands images, and converses using natural voice. But for AI to truly gain widespread adoption, one critical condition must be met: fast and stable performance.

For example, if a large language model generates text more slowly than users can read it, frustration sets in almost immediately. To deliver natural-sounding responses without noticeable latency, semiconductors capable of processing large-scale computations at high speed are essential.

As AI models grow larger and more complex, computational workloads increase sharply. This makes AI semiconductors, the hardware that supports AI performance and efficiency, more important than ever.

What are AI semiconductors?

AI semiconductors are chips designed to process AI computations quickly and efficiently. For AI to generate words faster than people can read them, it needs to produce roughly 10 to 100 words per second. Achieving this speed requires rapidly loading vast amounts of data and performing large-scale calculations. Memory access speed matters just as much as raw computational power. Think of AI semiconductors as AI-optimized accelerators built to handle this kind of data-intensive, high-speed computation.
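To see why memory access matters as much as raw compute, a rough back-of-the-envelope sketch may help. It assumes a simplified model in which generating each token (roughly standing in for a word) requires reading every model weight from memory once, so throughput is capped at memory bandwidth divided by model size. The parameter count, bandwidth, and byte widths below are hypothetical values chosen only for illustration, and the estimate ignores batching, caching, and compute limits.

```python
def decode_tokens_per_second(param_count, bytes_per_param, bandwidth_bytes_per_s):
    """Rough upper bound on tokens/s if every weight is read once per token."""
    model_bytes = param_count * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical numbers, not taken from any specific chip or model
params = 7e9        # 7 billion parameters
bandwidth = 1e12    # 1 TB/s of memory bandwidth

fp16 = decode_tokens_per_second(params, 2, bandwidth)    # 16-bit weights
int4 = decode_tokens_per_second(params, 0.5, bandwidth)  # 4-bit weights

print(f"16-bit weights: ~{fp16:.0f} tokens/s")
print(f"4-bit weights:  ~{int4:.0f} tokens/s")
```

Under these assumptions the bound depends only on how many bytes must be moved per token, which is why shrinking the weights raises the ceiling even when the arithmetic units stay the same.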

Types of AI semiconductors

At their core, AI models rely heavily on matrix operations—executing a massive number of calculations simultaneously. As models grow, the volume of these operations scales accordingly.

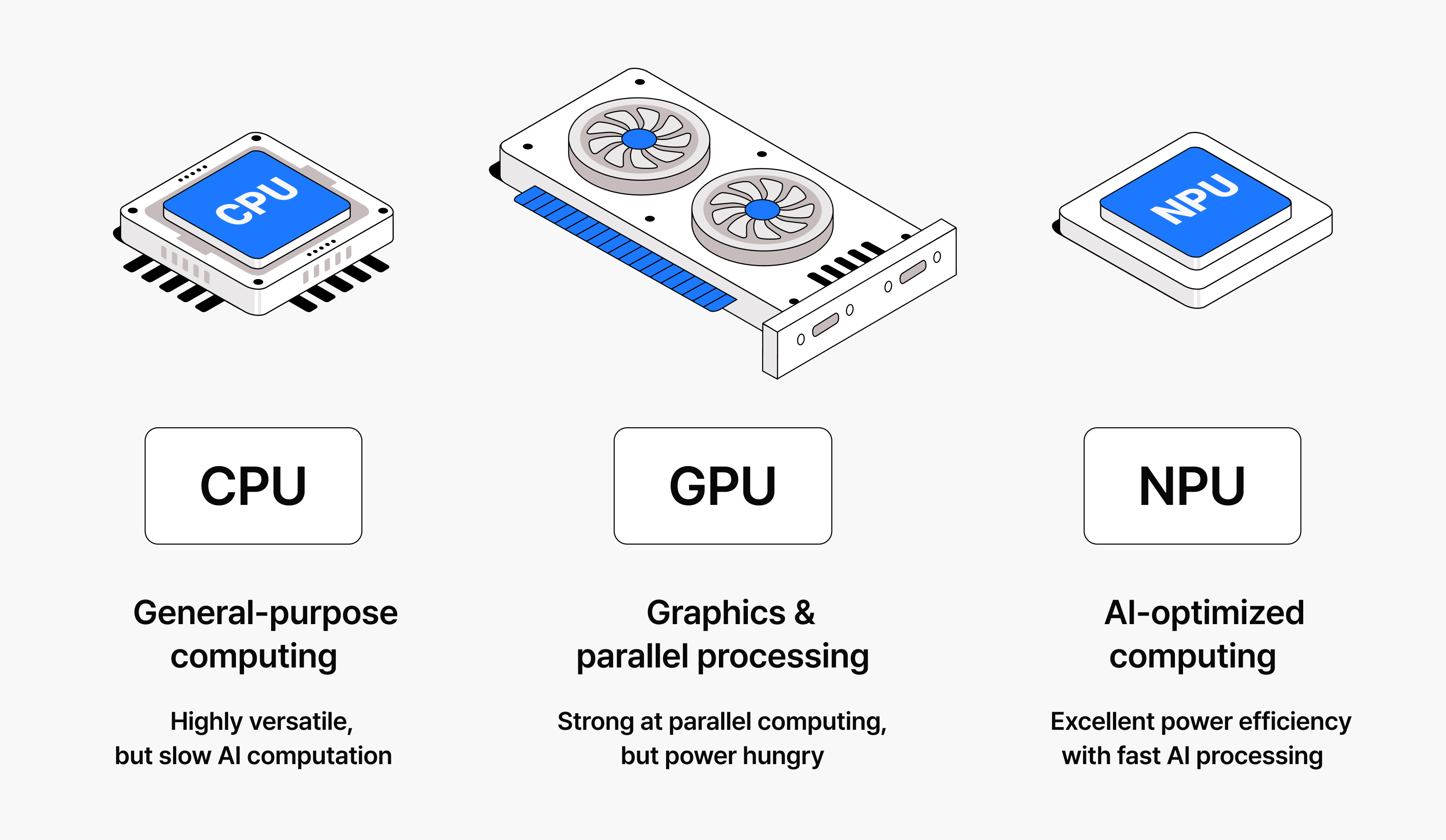

This is where traditional CPU-based architectures reach their limits. CPUs are designed for flexibility across various tasks and are typically built on a small number of cores (often 1 to 8) optimized for serial processing. Each core largely works through its instructions in sequence, which creates bottlenecks when AI workloads demand massive parallel computation. In contrast, GPUs and NPUs are architecturally optimized for large-scale matrix operations, making them far more efficient for AI tasks.
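The contrast between serial and parallel execution can be sketched with a small matrix multiplication. The key property is that every output row (indeed every output element) is independent of the others, so the work can be handed to many workers at once. The function names here are illustrative, and Python threads are used only to show the structure: they do not deliver the kind of speedup that GPU or NPU hardware provides.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Multiply-accumulate over one row and one column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_serial(A, B):
    """Compute each output element one after another."""
    cols = list(zip(*B))
    return [[dot(row, col) for col in cols] for row in A]


def matmul_parallel_rows(A, B, workers=4):
    """Compute each output row as an independent task."""
    cols = list(zip(*B))

    def one_row(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(one_row, A))


A = [[1, 2], [3, 4], [5, 6]]
B = [[7, 8, 9], [10, 11, 12]]

serial = matmul_serial(A, B)
parallel = matmul_parallel_rows(A, B)
print(serial)              # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
print(serial == parallel)  # True
```

Because no row depends on another, the order of execution does not change the result. That independence is what hardware with hundreds or thousands of cores exploits.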

GPU

A GPU (graphics processing unit) processes large amounts of data in bulk through parallel processing. With hundreds to thousands of cores, it can execute similarly structured operations simultaneously, giving it a clear advantage for high-volume data processing.

GPUs were originally designed to assist CPUs with multimedia tasks like gaming and video editing. But as AI became widespread, they evolved from graphics processors into essential computing resources for training large-scale AI models. While individual GPU cores lack the flexibility and fine-grained control of CPU cores, their vastly superior computational throughput makes them ideal for the repetitive matrix operations common in AI training with large datasets.

That said, GPUs weren’t designed specifically for AI from the start. While highly effective for processing training data, the inference stage—where trained models need to generate results quickly in production—calls for more specialized optimization tailored to AI algorithms.

NPU

This need is what drove the development of NPUs (neural processing units). NPUs are AI-optimized semiconductors that combine parallel processing capabilities with architectures designed around neural network structures and AI computational flows. They’re specifically engineered for low latency and high power efficiency during inference. This makes them ideal for on-device AI, running directly on edge devices like smartphones, wearables, and IoT devices without an internet connection.

Growth of the AI semiconductor market

The AI semiconductor market is expanding at a remarkable pace. Tasks that CPUs once handled—coding assistance, complex logical reasoning, and more—are increasingly being offloaded to AI-powered processing. This marks a fundamental shift away from traditionally CPU-centric computing architectures toward AI semiconductors.

Beyond these core tasks, AI is becoming central to communications, video processing, and sensor data interpretation. As a result, AI is breaking down the boundaries of the conventional semiconductor market and reshaping the industry as a whole. It’s no surprise that semiconductor companies are now directing their focus toward AI.

Need for AI efficiency

As AI expands into more areas and models grow more advanced, the demand for data processing and computation increases significantly. This translates directly into higher infrastructure costs and power consumption—making AI efficiency the next critical challenge.

Model compression

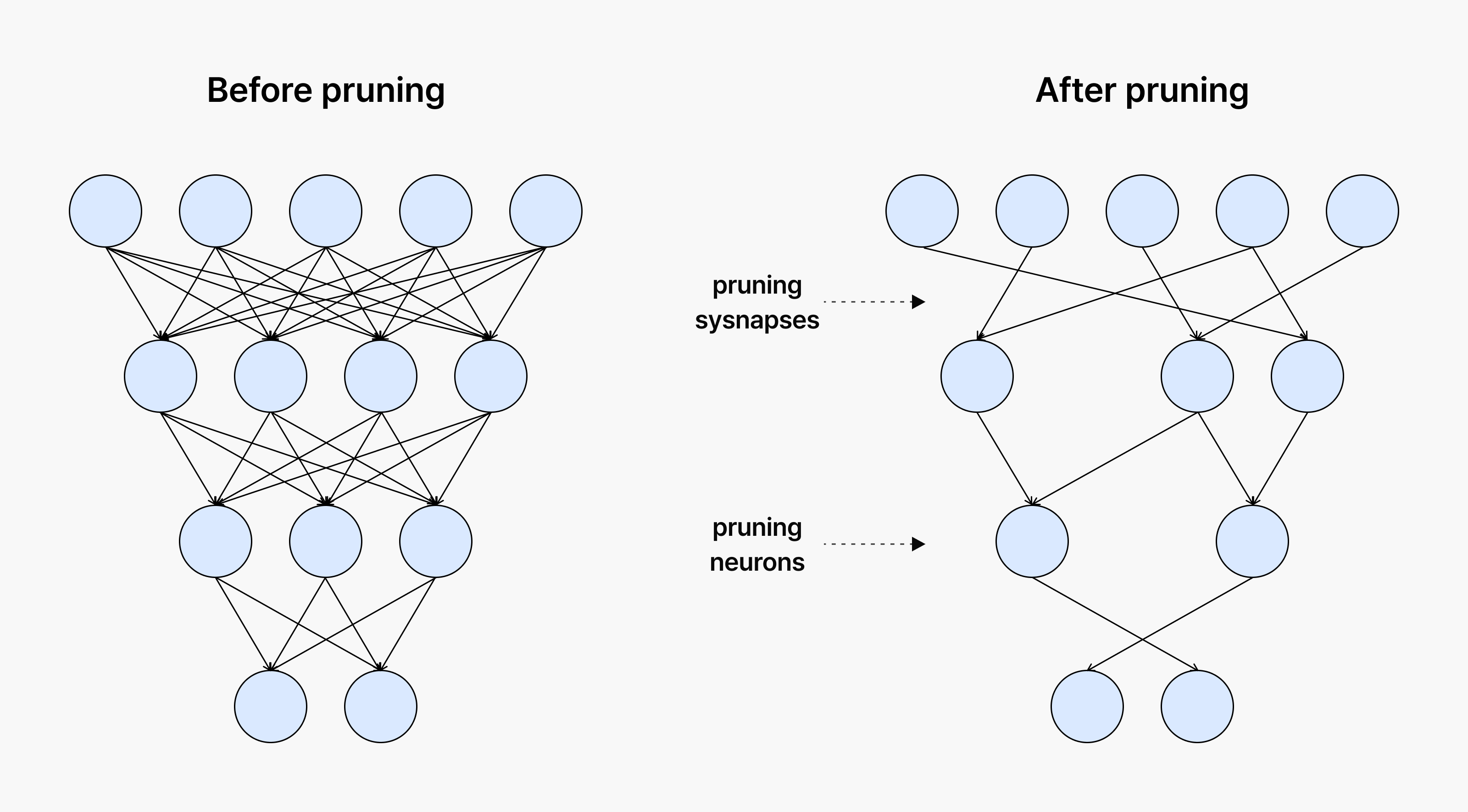

Model compression is gaining attention as a key to improving AI efficiency. Just as video compression technologies like H.264 and MPEG made video streaming affordable, AI models can be compressed in similar ways.

How are AI models compressed? The key lies in redundancy. During training, AI models use large numbers of parameters to reliably learn various data patterns. Once training is complete, however, many parameters converge to nearly identical results, adding unnecessary computational load. By removing this redundancy—essential during training but not after—efficiency improves significantly in real-world deployment.
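One common way to remove this kind of redundancy is magnitude pruning, in which the weights closest to zero are set to exactly zero. The sketch below is a minimal illustration of that idea on a tiny, made-up weight matrix. It is not necessarily the method used in any particular product, and the function name and values are assumptions for the example.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed."""
    # Rank every weight by absolute value, remembering its position
    ranked = sorted(
        (abs(w), r, c)
        for r, row in enumerate(weights)
        for c, w in enumerate(row)
    )
    k = int(len(ranked) * sparsity)  # how many weights to drop
    pruned = [row[:] for row in weights]
    for _, r, c in ranked[:k]:
        pruned[r][c] = 0.0
    return pruned


# Hypothetical 2x3 weight matrix
W = [[0.9, -0.02, 0.4],
     [0.01, -0.7, 0.03]]

P = magnitude_prune(W, sparsity=0.5)
remaining = sum(1 for row in P for w in row if w != 0.0)

print(P)          # [[0.9, 0.0, 0.4], [0.0, -0.7, 0.0]]
print(remaining)  # 3
```

Half of the weights are gone, yet the large weights that dominate the output are untouched. In practice a pruned model is usually fine-tuned afterward to recover any lost accuracy.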

According to the “lottery ticket hypothesis” proposed by MIT researchers, large-scale AI models already contain much smaller subnetworks, or “winning tickets,” that can match the full model’s accuracy when trained on their own. Identifying these tickets makes it possible to remove significant portions of the model. But making a model lightweight doesn’t simply mean reducing file size. The real goal is reducing the power consumption and memory access required to run services.

(Source: Han, Song, et al., “Learning both weights and connections for efficient neural networks,” Advances in Neural Information Processing Systems, 2015.)

Compression-friendly AI semiconductors

Compressed computational structures, however, don’t work well with existing GPU architectures. GPUs are most efficient when thousands of cores simultaneously perform similar operations, but compressed models have uneven computational loads and irregular patterns. This can create bottlenecks where some cores sit idle while others are still working. In many cases, the benefits of compression don’t just fail to materialize: the model can actually run slower after compression.
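The idle-core problem can be illustrated with a toy model. Suppose each parallel lane handles one row of a weight matrix and all lanes must wait for the slowest one before moving on. The row workloads below are hypothetical, and the model deliberately ignores real-world costs such as index lookups for sparse data, which can add further overhead.

```python
def lane_utilization(work_per_lane):
    """Fraction of lane-time doing useful work when lanes wait for the slowest."""
    slowest = max(work_per_lane)
    if slowest == 0:
        return 1.0
    return sum(work_per_lane) / (len(work_per_lane) * slowest)


dense_rows = [8, 8, 8, 8]   # every row needs 8 multiply-adds
pruned_rows = [7, 1, 2, 2]  # hypothetical nonzero counts after pruning

dense_util = lane_utilization(dense_rows)
pruned_util = lane_utilization(pruned_rows)

print(f"dense:  {sum(dense_rows)} ops, {max(dense_rows)} steps, "
      f"utilization {dense_util:.2f}")
print(f"pruned: {sum(pruned_rows)} ops, {max(pruned_rows)} steps, "
      f"utilization {pruned_util:.2f}")
```

In this toy case the pruned matrix needs 12 operations instead of 32, but it still takes 7 steps instead of 8 because one lane carries most of the work. Most of the theoretical savings are lost to waiting.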

This is why semiconductors with architectures optimized for compression are so important. It’s not just about changing the algorithms: hardware must be designed from the ground up to run compressed operations efficiently. Only then can a lightweight model and high speed be achieved together. TEAM NAVER’s research on compression-friendly AI semiconductors, work that could shape the future market, is already well underway.

Where AI semiconductors will go next

The evolution of AI semiconductors ultimately follows the trajectory of AI model development. One concept emerging as the next stage of AI is agentic AI: autonomous AI that makes independent judgments, forms plans, and carries them out, rather than simply responding to requests.

In an agentic AI environment, processing a single problem can require various types of computation simultaneously. GPUs are optimized for parallel processing of similar operations in bulk, but agentic AI must handle different requests from different users. In other words, there will often be situations where heterogeneous operations—language, image, voice, and code—must be performed at the same time.

Consider a scenario where:

- User A asks to summarize a lengthy document

- User B wants to analyze an image

- User C starts a voice conversation

GPUs excel at batch processing of similar operations, but they struggle to respond efficiently in environments where different computation patterns occur simultaneously. Ultimately, AI semiconductors need to evolve toward designs with greater flexibility and adaptability.
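A small sketch can make the batching problem concrete. Here requests are grouped by workload type, on the simplifying assumption that only requests of the same type can share a batch. The type labels and function name are hypothetical and exist only for this illustration.

```python
from collections import defaultdict


def group_into_batches(requests):
    """Group (user, kind) requests so each batch holds one kind of workload."""
    batches = defaultdict(list)
    for user, kind in requests:
        batches[kind].append(user)
    return dict(batches)


# Three users asking for the same kind of work
uniform = [("A", "text"), ("B", "text"), ("C", "text")]
# The scenario above: summarization, image analysis, voice conversation
mixed = [("A", "text"), ("B", "image"), ("C", "voice")]

uniform_batches = group_into_batches(uniform)
mixed_batches = group_into_batches(mixed)

print(uniform_batches)  # {'text': ['A', 'B', 'C']}
print(mixed_batches)    # {'text': ['A'], 'image': ['B'], 'voice': ['C']}
```

With uniform requests, one batch of three keeps the hardware busy on a single computation pattern. With mixed requests, the same three users produce three batches of one, each with a different pattern, which is the situation batch-oriented hardware handles least efficiently.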

Conclusion

However rapidly AI technology advances, it requires a solid foundation—and that foundation is semiconductors. AI semiconductors are no longer just hardware components; they’ve become a core strategic technology that could shape the future of society.

For South Korea, which has traditionally shown strength in semiconductors and manufacturing, AI semiconductors represent more than technological competition. They mark an important turning point, one that opens doors to new growth opportunities. AI semiconductors and the ecosystem surrounding them will drive the next era shaped by AI, playing an integral role in making that transformation a reality.

Learn more in KBS N Series, AI Topia, episode 7

You can see these concepts in action in the seventh episode of KBS N Series’ AI Topia, “AI semiconductors: Game changer in the era of AI.” Lee Dongsoo from AI Computing Solution at NAVER Cloud breaks down these ideas with clear examples and helpful context. It’s a great way to get a fuller picture of the topics covered in this post.