Understanding the benefits and challenges of deploying conversational AI leveraging large language models for public health intervention

Best Paper Award at ACM CHI 2023

Kim Young-Ho, Research Scientist (Research Group Leader in HCI at NAVER AI Lab and NAVER Cloud)

Overview

This post introduces a paper from NAVER that received a Best Paper Award at ACM CHI 2023, a leading conference in human-computer interaction (HCI) research.

Understanding the Benefits and Challenges of Deploying Conversational AI Leveraging Large Language Models for Public Health Intervention

Jo Eunkyung, Research Intern at NAVER AI Lab (PhD Candidate, University of California, Irvine)

Daniel A. Epstein (Assistant Professor, University of California, Irvine)

Jung Hyunhoon (NAVER Cloud)

Kim Young-Ho (NAVER AI Lab, NAVER Cloud)

Introduction

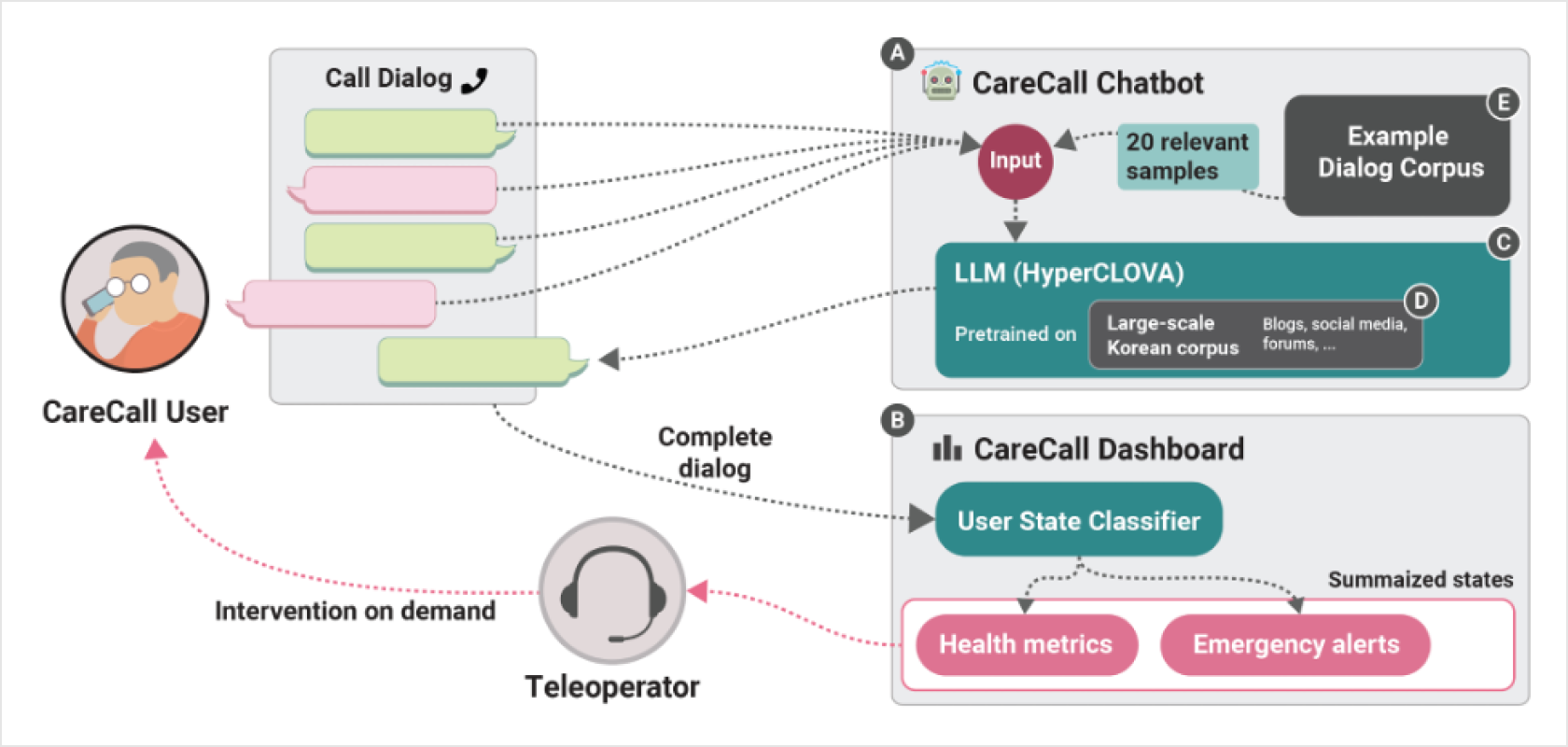

LLM-driven chatbots are now commonplace and are making inroads in the IT market. A year before OpenAI released ChatGPT near the end of 2022, NAVER had already launched CLOVA CareCall, powered by NAVER’s LLM, HyperCLOVA. For this groundbreaking project, we worked with local governments to provide social care to individuals living alone through AI check-up phone calls. The accompanying figure gives an overall picture of how CLOVA CareCall works. First, the LLM-driven chatbot checks in on the user through twice-weekly calls. The social worker is then granted access to the call recording and analysis so that they can respond appropriately to different situations and emergencies.

Such community-wide systems and policies for providing emotional support and improving physical health are called public health interventions. We recognized CLOVA CareCall as a rare and valuable example of an LLM being used for public health intervention, and this realization inspired us to explore CLOVA CareCall and its implications through further research.

Why is CLOVA CareCall getting the spotlight in the HCI field?

Many other chatbots predated CLOVA CareCall as public health intervention tools. In terms of user experience, however, LLM-based chatbots differ crucially from rule- or scenario-based chatbots. Whereas traditional chatbots are designed to converse on pre-defined topics and are ill-prepared to answer unexpected questions, LLM-based chatbots are trained on a broad range of situations and interact with users more naturally, making users feel that they are being listened to.
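To make the contrast concrete, here is a minimal sketch of how a scenario-based chatbot handles input. The keyword table, the replies, and the function name are hypothetical and chosen only for illustration; they do not describe any actual system mentioned in this post.

```python
# Hypothetical scenario table: keyword -> scripted reply (illustrative only).
SCENARIO = {
    "sleep": "How many hours did you sleep last night?",
    "meal": "Did you eat three meals today?",
}
FALLBACK = "Sorry, I did not understand that."


def scripted_reply(utterance: str) -> str:
    """Return the scripted reply for the first matching keyword, else a fallback."""
    text = utterance.lower()
    for keyword, reply in SCENARIO.items():
        if keyword in text:
            return reply
    # Anything outside the pre-defined topics ends up here.
    return FALLBACK


print(scripted_reply("I skipped a meal this morning"))
print(scripted_reply("I have been sketching lately"))
```

The first input matches a pre-defined topic and gets the scripted meal question, while the second falls through to the fallback. A scripted design is fully predictable in this way, but it cannot follow the user onto a topic nobody anticipated.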

This doesn’t mean that LLM-driven chatbots are without challenges of their own. Because LLMs generate responses from prompts instead of following pre-built scenarios, it is often difficult to predict, let alone control, how a conversation will unfold. Deploying such chatbots to a vulnerable group, namely middle-aged and older adults living by themselves, requires ensuring reliability in advance and reaching a consensus with municipal authorities. Due to these difficulties, LLM-based chatbots introduced through public-private partnerships were unprecedented in the public health field before CLOVA CareCall in 2022. This is why CLOVA CareCall offers much food for thought for HCI researchers interested in how humans think and behave around this emerging technology. The scope of our research goes beyond user interaction: we also wanted to shed light on the conflicting interests between the CLOVA CareCall development team and the municipal authorities looking to implement the chatbot, which we summarize here.

Multi-stakeholder interviews

For this research, we conducted interviews with three groups of stakeholders.

1. Interview with users: five seniors living alone who received regular check-up calls

2. Interview with teleoperators: five social workers hired by local governments who read the call transcripts and took care of the elderly

3. Interview with developers: ten members of NAVER’s CLOVA CareCall development team, including machine learning engineers, UX designers, business managers, and a quality manager

In addition, we had an opportunity to observe focus group workshop sessions held by the Seoul Metropolitan Government, where we could gather indirect feedback from 14 participants who had been using CLOVA CareCall.

Benefits of leveraging an LLM-driven chatbot in public health intervention

From our interviews with users and teleoperators, we could better understand how an LLM-driven chatbot could serve as a useful public health intervention.

– Social workers can reduce their workload while gaining a holistic understanding of each care recipient.

Social workers said in their interviews that they could gain deeper insights into the lives of older adults because the calls were more like chitchat than doctor-patient dialogue. Instead of asking pre-defined questions, the AI chatbot was free to converse on any health-related topic, offering social workers a better glimpse of participants’ lives. Before the introduction of CLOVA CareCall, it was the workers’ job to make regular calls to the many care recipients, which was not realistically feasible. By offloading the calls to AI, workers could step in only in crucial situations, making it possible to implement public health interventions at scale.

“I’m not sure if I could’ve taken on the job if I had to call 26 individuals twice a week. It’d be both mentally and physically exhausting to repeat the questions over and over again. Human phone calls are also more likely to get sidetracked. We’ll ask the usual check-up questions, but the adults receiving the call will probably want to talk about other things as well. The phone call might end up taking 30 minutes, which far exceeds the given time slot.” —Teleoperator 2

– Users feel less lonely and are burdened less by face-to-face communication.

Did the users also feel the same excitement about AI calls? Most participants said they didn’t have someone to talk to in everyday life, so taking a call from the AI alleviated their loneliness. They could immerse themselves in the conversation because the LLM-driven chatbot could cover various topics beyond health.

“When I said I was sketching or reading a book by a painter, the chatbot would say something like ‘That sounds fun! I’d like to learn how to draw, too.’ This made me feel good because it really felt like I was talking to someone.” —User 5

Others cited the fact that an AI, not a human agent, was making the call as another benefit of the service. Some felt they owed something to the social workers, while others said they could open up more when getting calls from an AI.

“I know the social workers are checking up on me because I have a chronic condition and live alone. Sometimes, the calls feel perfunctory because they hang up after asking a question or two. I’d rather get AI calls.” —User 3

Challenges of leveraging an LLM-driven chatbot in public health intervention

Despite the benefits, there are still barriers to employing LLMs as a public health intervention.

– Taking full control of LLM-based chatbots is inherently impossible.

The developers of CLOVA CareCall described how difficult it was to design the chatbot so that it would not generate responses outside the public health context. Our development team fine-tuned the dialogue model by training it on conversation datasets, then used unlikelihood training to reduce the probability of the LLM outputting irrelevant responses. Though these machine learning methods may help steer conversations in a desired direction, preventing the model from generating improper responses altogether is impossible because LLMs are inherently probabilistic models. This is why developers often say they are “taming the model” when referring to the chatbot development process.
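As a rough illustration of the idea behind unlikelihood training, the sketch below computes a token-level loss in its commonly cited form: the usual negative log-likelihood of the desired token plus a penalty of -log(1 - p) for each token the model should avoid. This is an assumption about the general technique, not the CLOVA CareCall team's actual training code; the function names, the `alpha` weight, and the example logits are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def unlikelihood_loss(logits, target, negatives, alpha=1.0):
    """Likelihood term for the target plus a penalty for each unwanted token.

    logits:    raw scores over a toy vocabulary
    target:    index of the token we want the model to produce
    negatives: indices of tokens we want the model to avoid
    alpha:     hypothetical weight on the unlikelihood term
    """
    probs = softmax(logits)
    likelihood_term = -math.log(probs[target])
    # Clamp to avoid log(0) if an unwanted token has probability ~1.
    unlikelihood_term = -sum(
        math.log(max(1.0 - probs[c], 1e-12)) for c in negatives
    )
    return likelihood_term + alpha * unlikelihood_term


# Toy vocabulary of three tokens; token 1 stands for an off-topic reply.
before = unlikelihood_loss([2.0, 1.0, 0.1], target=0, negatives=[1])
after = unlikelihood_loss([2.0, -3.0, 0.1], target=0, negatives=[1])
print(before, after)
```

Lowering the score of the unwanted token lowers the loss, so training pushes its probability down. The probability never reaches exactly zero under a softmax, which is consistent with the developers' point that improper responses can be made rarer but not ruled out.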

“LLMs have a strong ego, so we have to fight against them. It’s not like we can correct the inappropriate responses then and there. Even with countless trial and error, creating a chatbot that is perfectly under our control is a tough task.” —Developer 9

“With a rules-based chatbot, correcting a wrong output is as easy as modifying the scenario. With an LLM-driven chatbot, however, we must identify which patterns produce those responses and then improve the model with even more training data. So control remains elusive.” —Developer 2

– Tailoring chatbots to the needs of local governments is not easy.

Sometimes, misunderstandings arose between developers and public health officials. The CLOVA CareCall project was introduced essentially as a chatbot service for public health intervention, so local government officials believed the chatbot could be easily customized to track the health metrics that interested them. For example, in areas where a significant proportion of the population was suffering from dementia, officials wanted to include questions for dementia screening. What they had in mind was a rule- and scenario-based chatbot, and they expected the CLOVA CareCall chatbot to work the same way.

“The only thing we can do is create a new conversation dataset by adding particular questions and use that to fine-tune the chatbot. This may increase the likelihood of those questions to come up during conversations, but it does not guarantee that they will all the time.” —Developer 5
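The developer's point can be illustrated with simple arithmetic. The sketch below assumes, purely for illustration, that the chatbot raises a given question on each conversational turn with a fixed probability and that turns are independent; both assumptions and all numbers are hypothetical and are not measurements from CLOVA CareCall.

```python
def miss_probability(p_ask_per_turn: float, turns: int) -> float:
    """Chance that a question never comes up in a call of `turns` turns,
    assuming each turn raises it independently with the given probability."""
    return (1.0 - p_ask_per_turn) ** turns


# Hypothetical per-turn probabilities before and after fine-tuning.
for label, p in [("before fine-tuning", 0.05), ("after fine-tuning", 0.30)]:
    print(label, miss_probability(p, turns=10))
```

Under these assumptions, fine-tuning shrinks the chance that the question is skipped, but that chance stays above zero for any per-turn probability below 1. A scripted chatbot, by contrast, asks the question on every call by construction.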

The datasets used to fine-tune the chatbot were made up of everyday speech used to check up on older adults, so in municipalities where a relatively high percentage of middle-aged people lived alone, the chatbot would sometimes say things that were out of context.

“When someone says they have a backache, the chatbot will likely agree with them and say, ‘Don’t we all.’ This response is perfectly fine for someone in their 70s, but not for someone in their 40s.” —Developer 2

Customizing the chatbot for each age group required creating yet another training dataset, which is both resource- and time-intensive. For this reason, the developers could not accommodate all the different demands.

Conclusion

We briefly discussed the benefits and challenges of using an LLM-driven chatbot for public health intervention, taking CLOVA CareCall as an example. With the success of ChatGPT, the public is now familiar with LLMs, and we expect many of the challenges we encountered to be resolved over time as these models advance at unprecedented speed. Still, research on human-AI interaction must continue if we are to address the uncertainty and complexity of these models.