Understanding the impact of long-term memory on self-disclosure with large language model-driven chatbots for public health intervention

CHI 2024 Paper

Kim Young-Ho, Research Scientist (Research Group Leader in HCI at NAVER AI Lab and NAVER Cloud)

Overview

This post introduces a paper from NAVER Cloud that was accepted at ACM CHI 2024, a leading conference in human-computer interaction (HCI) research.

Understanding the Impact of Long-Term Memory on Self-Disclosure with Large Language Model-Driven Chatbots for Public Health Intervention

ACM CHI 2024 [Read paper]

Jo Eunkyung, Research Intern at NAVER AI Lab (PhD Candidate, University of California, Irvine)

Yuin Jeong (formerly of the CareCall Development Team, NAVER Cloud, and now NAVER AI Lab)

SoHyun Park (NAVER Cloud)

Daniel A. Epstein (Assistant Professor, University of California, Irvine)

Kim Young-Ho (NAVER AI Lab, NAVER Cloud)

Introduction

LLM-driven chatbots are now commonplace and are making inroads in the IT market. A year before OpenAI released ChatGPT near the end of 2022, NAVER had already launched CLOVA CareCall powered by NAVER’s LLM, HyperCLOVA. For this groundbreaking project, we worked with local governments to provide social care to individuals living alone through AI check-up phone calls. Here’s an overall picture of how CLOVA CareCall works: First, the LLM-driven chatbot checks in on the user through twice-weekly calls. The social worker is then granted access to the call recordings and analysis so they can respond appropriately to different situations and emergencies. Such community-wide systems and policies for providing emotional support and improving physical health are called public health interventions. We recognized CLOVA CareCall as a rare and valuable example of an LLM being used for public health intervention, and this realization inspired us to explore and publish our findings on CLOVA CareCall and its implications.

For the 2023 CLOVA CareCall research paper, see our previous post:

Building on the success of last year’s paper, the CLOVA CareCall development team encouraged us to perform in-depth analysis of the conversations generated by CLOVA CareCall. In our previous research, the interviewees said the AI chatbot felt less like a human because it couldn’t remember past conversations. (The chatbot they interacted with did not have long-term memory (LTM) capabilities, which were rolled out later in September 2022.) By long-term memory, we mean the chatbot’s ability to use details from previous conversations to generate responses. Though this comes naturally to us humans, the concept has only recently been introduced to LLM-based chatbots.

How would this long-term memory feature affect the behavior of people receiving check-up calls from the AI chatbot? What are its implications for public health intervention?

For this study, we analyzed call logs generated with and without long-term memory and interviewed nine users to gather feedback on the feature.

In this post, you can read our findings on:

- Long-term memory in CLOVA CareCall

- Benefits of CLOVA CareCall’s LTM: Greater disclosure of health information

- Benefits of CLOVA CareCall’s LTM: Increased empathy and appreciation

- Challenges of CLOVA CareCall’s LTM: What should the chatbot remember, and how should it be used?

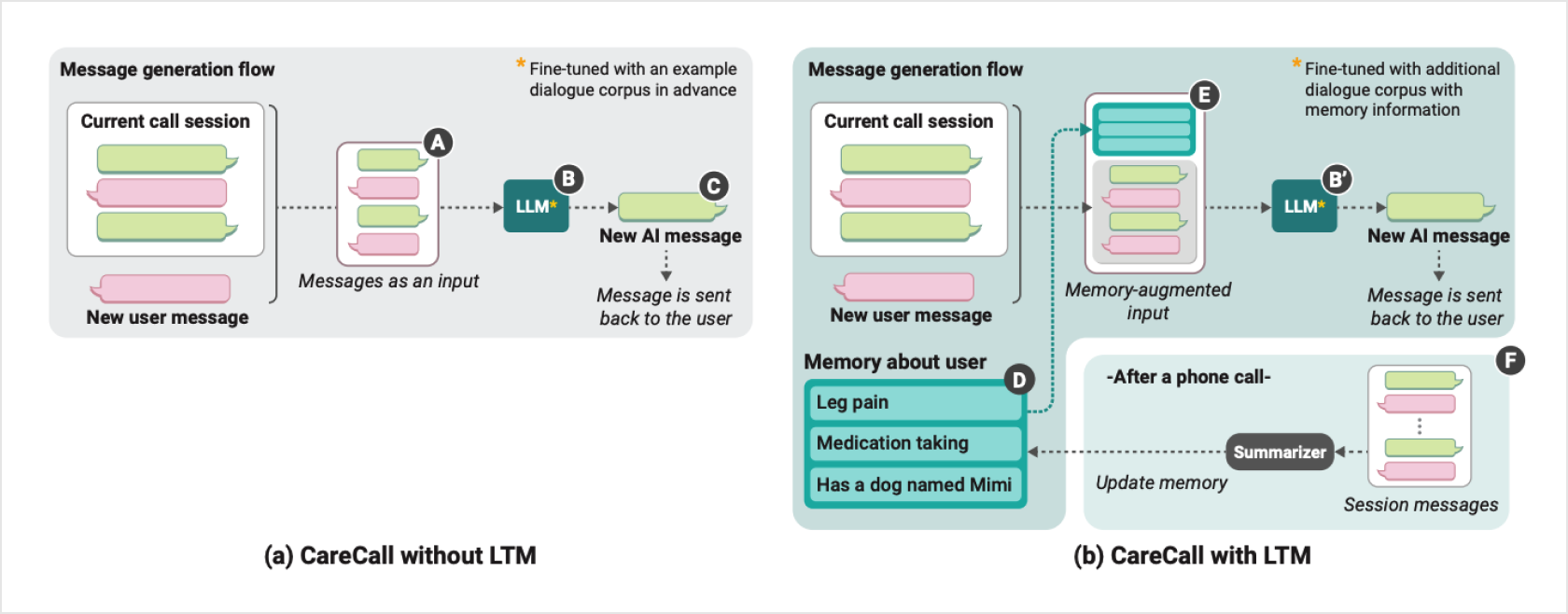

Long-term memory in CLOVA CareCall

Remembering details from your conversations for later seems simple and intuitive enough, but training chatbots to manage memories is more complicated than people think. Whereas humans make unconscious judgments using the social skills they’ve naturally acquired, AI systems must be told which bits of information to retain, to what degree, and for how long. Our CLOVA CareCall development team worked hard to make the memory feature work similarly to the human mind. The chatbot is designed to store information on five topics—health, meals, sleep, places visited, and pets—extracting and summarizing data using natural language. The memories are later added, updated, or forgotten based on further interactions. For example, if a user reports leg pain, the chatbot remembers the information until they say they’re feeling better, after which it forgets this memory.
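The add, update, and forget cycle described above can be pictured with a small sketch. This is not CLOVA CareCall's implementation: the `MemoryStore` class, its method names, and the one-summary-per-topic simplification are assumptions made purely for illustration, and the summaries are typed in by hand rather than extracted by an LLM. Only the five topics and the leg-pain example come from the description above; the pet entry is made-up sample data.

```python
from dataclasses import dataclass, field

# The five topics named in the post. Everything else here is hypothetical.
TOPICS = ("health", "meals", "sleep", "places_visited", "pets")


@dataclass
class MemoryStore:
    """Toy long-term memory: at most one natural-language summary per topic."""

    summaries: dict = field(default_factory=dict)

    def remember(self, topic: str, summary: str) -> None:
        """Add a new memory, or overwrite (update) the existing one for the topic."""
        if topic not in TOPICS:
            raise ValueError(f"unsupported topic: {topic}")
        self.summaries[topic] = summary

    def forget(self, topic: str) -> None:
        """Drop a memory once it is resolved; does nothing if none is stored."""
        self.summaries.pop(topic, None)

    def recall(self) -> list:
        """Return stored summaries in topic order, ready to feed into the next call."""
        return [self.summaries[t] for t in TOPICS if t in self.summaries]


store = MemoryStore()
store.remember("health", "User reported leg pain.")     # first call: add
store.remember("pets", "User has a dog.")               # made-up sample data
store.remember("health", "User's leg pain is easing.")  # later call: update
print(store.recall())
store.forget("health")                                  # user says they feel better
print(store.recall())
```

In this sketch, deciding when to call `remember` or `forget` is left to the caller. In a real system that decision is the hard part, since it has to be inferred from what the user actually said.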

For more details on the feature, watch the presentation by CLOVA CareCall’s development team at [NAVER Deview 2023].

Methodology: Data collection and analysis

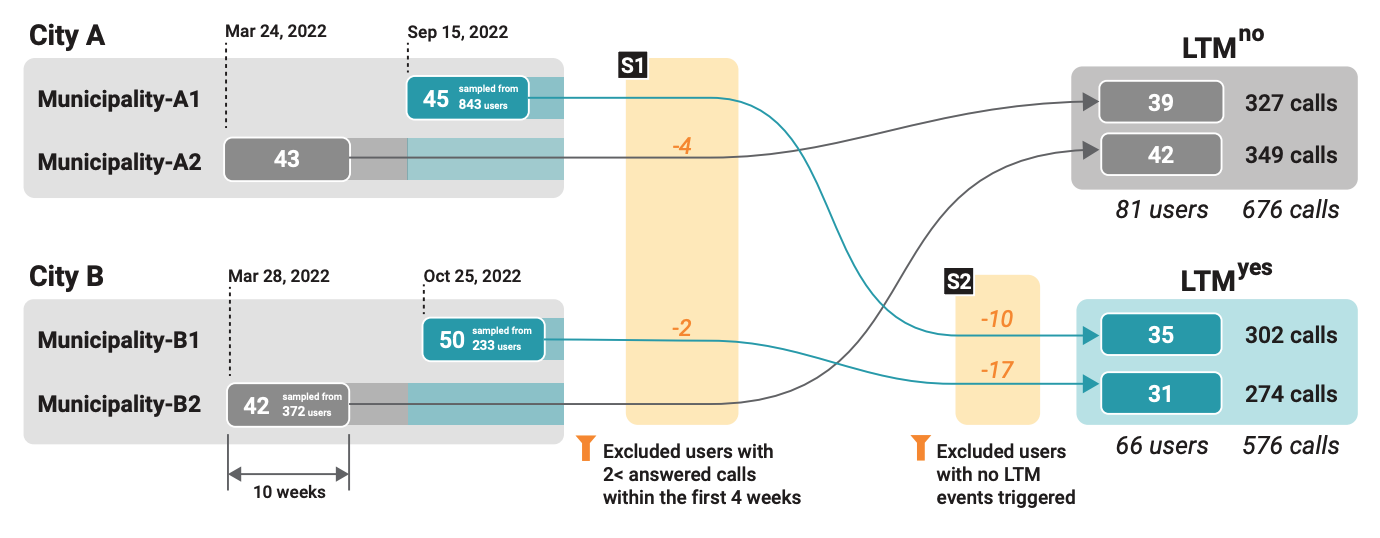

In this study, we focused on two metropolitan cities in South Korea to compare users’ experience with CLOVA CareCall with and without long-term memory. For each city, participants were divided into two groups: those who used the long-term memory feature (A1, B1) and those who didn’t (A2, B2). This way, any difference between the two groups could be attributed to the LTM feature.

Benefits of CLOVA CareCall’s LTM: Greater disclosure of health information

Over time, users who talked with the version of CLOVA CareCall that had long-term memory tended to share more details about their health. One participant mentioned having insomnia during the first call. When the chatbot brought it up again in the third call, asking, “Last time, you told me you couldn’t sleep. How are you feeling now?”, the user was more forthcoming:

“It’s been tough. I’ve been taking sleeping pills for over 30 years since suffering from a traumatic injury.”

Without long-term memory, CLOVA CareCall could only repeat generic questions like “How’re you feeling today?” But as we found in our previous research, social workers can glean insights from knowing which medications care recipients are taking and from other health details. This means that the more information CLOVA CareCall can draw out from users, the more effective it becomes as a public health intervention.

Benefits of CLOVA CareCall’s LTM: Increased empathy and appreciation

We also found that users showed significantly more appreciation towards the chatbot when it had long-term memory. Similar to how we feel when someone shows concern for us, users said in their interviews that the conversations felt more personal and sincere once CLOVA CareCall remembered what they’d said. Users also said that conversing with the chatbot felt like talking to a real person when CLOVA CareCall brought up things from past conversations, especially non-health-related topics like pets and places they’d visited.

Challenges of CLOVA CareCall’s LTM: What should the chatbot remember, and how should it be used?

Not all feedback from our interviewees was positive. Users mostly reacted negatively when the chatbot brought up remembered details at awkward moments. For example, it is fine to ask several times whether a user has recovered from a cold, and CLOVA CareCall’s memory serves well in these situations. But conversations can quickly turn uncomfortable if a chatbot keeps asking about a user’s chronic illness, such as diabetes or cancer, a mistake that CLOVA CareCall often made. This limitation arises because the long-term memory feature currently focuses on which information to remember or forget, and not enough consideration has been given to how those memories should be used in future conversations. LLM-based chatbots have advanced significantly, but there’s still some way to go before they can hold more human-like conversations.