Engineering has always been about building useful tools—and when developing new technologies, we’ve often looked to nature for inspiration. The airplane is a perfect example. We observed how birds and insects fly and learned that flight requires wings and a tail. But when it came to the actual design, we chose propulsion through an engine rather than flapping wings. We took nature’s principles, not its form, and reinterpreted them in the most effective way.

The same is true for AI. We study human intelligence, then use machines to replicate it as effectively as possible. At its core, AI is a technology that turns aspects of human intelligence into tools—from simple calculations like addition and subtraction, to more complex capabilities like memory, understanding, judgment, logic, inference, and creativity. Seen this way, AI isn’t something that appeared out of nowhere. It’s been with us for a long time.

Take the calculator. Calculators represent AI in its earliest form, turning human calculation into machine capability. By combining electrical engineering with mathematical theory, what humans once did by hand could now be done by a tool. Later, with the invention of transistors, machines gained the ability to store data in memory, and calculators evolved into computers. Computers then expanded into smartphones, transforming our lives with countless applications. At the time, people may have felt the same mix of anxiety and expectation that we now feel toward AI. But calculators didn't replace human intelligence; they became tools that expanded it.

This trend continued with information search technology. In the past, finding the information you wanted meant hours in a library. Today, search engines locate what you need instantly from documents worldwide with just a few keywords. This technology helped platforms like Google and NAVER to grow into global leaders.

So why is everyone talking about AI now, when we’ve already been living with it for so long?

Let’s take a look.

From AlphaGo to Papago and beyond: The birth of LLMs

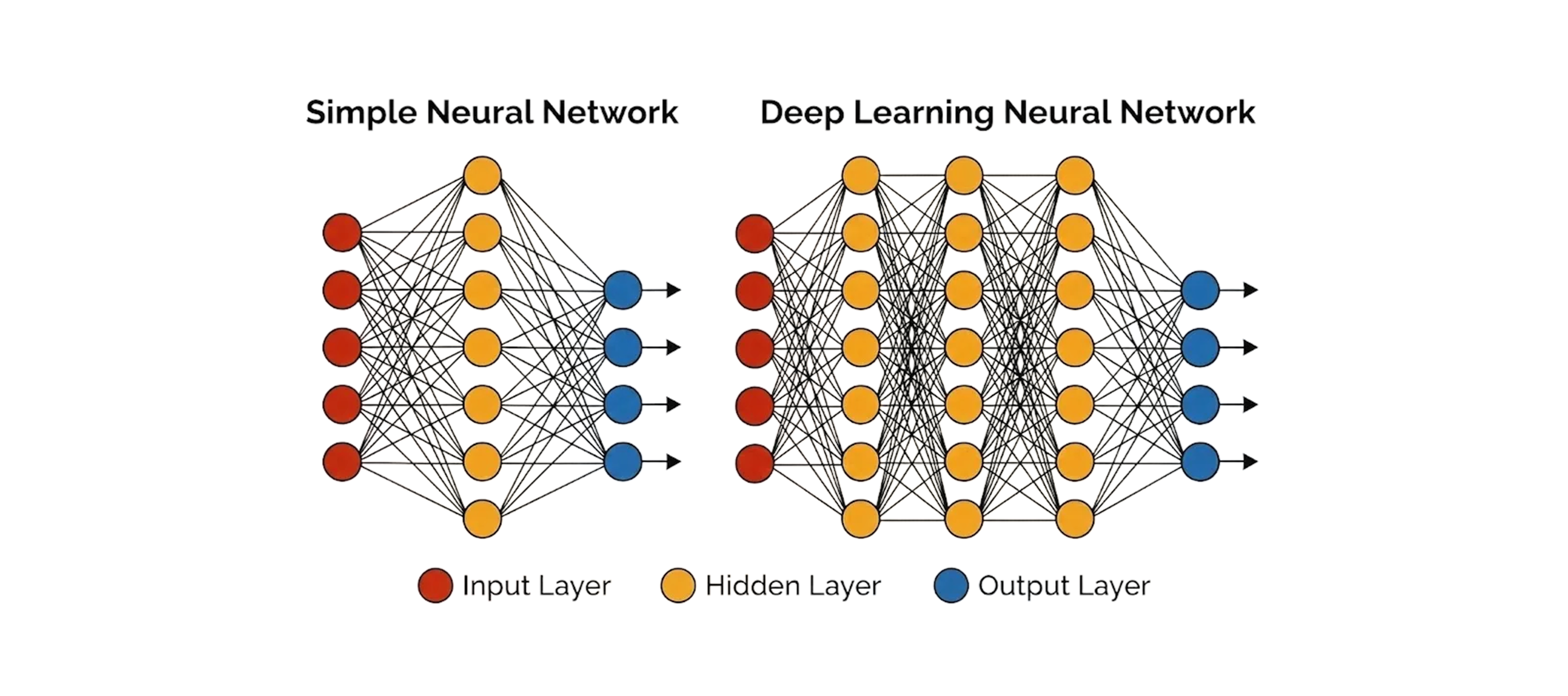

There are many ways to build AI. One of them is machine learning, a key technology that has driven AI development over the past few decades. Early methods took a statistical approach: interpret data based on human-defined characteristics, then train the model on how to weight them.

Say you want to build a model that can tell a desk from a chair. Humans had to first define the key features—number of legs, height, structure—and the model only learned how much weight to give each one. This meant models could never move beyond the limitations that people had set.
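To make the older approach concrete, here is a minimal sketch in Python. It assumes three hypothetical hand-picked features per object (number of legs, height in meters, and whether it has a backrest, standing in for "structure") and uses a classic perceptron as the learner. The original text doesn't say which algorithm early systems used, so treat the perceptron as one illustrative choice. The only thing the model learns is one weight per feature plus a bias.

```python
from typing import List, Tuple

# Hypothetical hand-crafted features a person decided on in advance:
# (number_of_legs, height_m, has_backrest). The model never sees raw images.
Features = Tuple[float, float, float]


def predict(weights: List[float], bias: float, x: Features) -> int:
    """Return 1 for 'chair', 0 for 'desk' from a weighted sum of the features."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def train_perceptron(samples: List[Features], labels: List[int],
                     epochs: int = 20, lr: float = 0.1) -> Tuple[List[float], float]:
    """Learn only how much weight to give each human-defined feature (plus a bias)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # 0 when correct, +1 or -1 when wrong
            # Perceptron rule: weights change only when the prediction is wrong.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy, made-up training data (1 = chair, 0 = desk).
samples: List[Features] = [
    (4, 0.9, 1.0),   # chair
    (4, 0.75, 0.0),  # desk
    (3, 0.8, 1.0),   # chair
    (4, 0.7, 0.0),   # desk
]
labels = [1, 0, 1, 0]

weights, bias = train_perceptron(samples, labels)
print("learned weights:", weights, "bias:", bias)
print("new object (4 legs, 1.0 m, backrest):", predict(weights, bias, (4, 1.0, 1.0)))
```

Whatever values the weights settle on, the model can only combine the three numbers chosen up front. If a person had left out the backrest feature, no amount of training would let the model discover that cue on its own, which is the limitation described above.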

Then AlphaGo came along in 2016 and changed everything. AlphaGo was built on deep learning, which finds meaningful patterns in raw data on its own rather than relying on characteristics that humans specify. Models could now pick up on things people missed, learning far more extensively and performing at a whole new level.

Deep learning requires vast amounts of data and computing power, but its results are far better than those of older techniques. AlphaGo's victory over a world champion wasn't just a surprise; it was a signal that AI had entered a new era.

From machine translation to LLMs: How a language model expands into intelligence

The deep learning revolution brought decisive change to natural language processing (NLP). Until the early 2010s, most machine translation relied on statistics-based models, which often produced awkward or out-of-context translations because they could only process sentences in fragments. This limited their quality for everyday use.

But things changed dramatically around 2013, when deep learning was applied to machine translation research. Models could now grasp entire sentence structures, meanings, and relationships between words—making it possible to generate natural-sounding translations that statistics-based models couldn’t achieve.

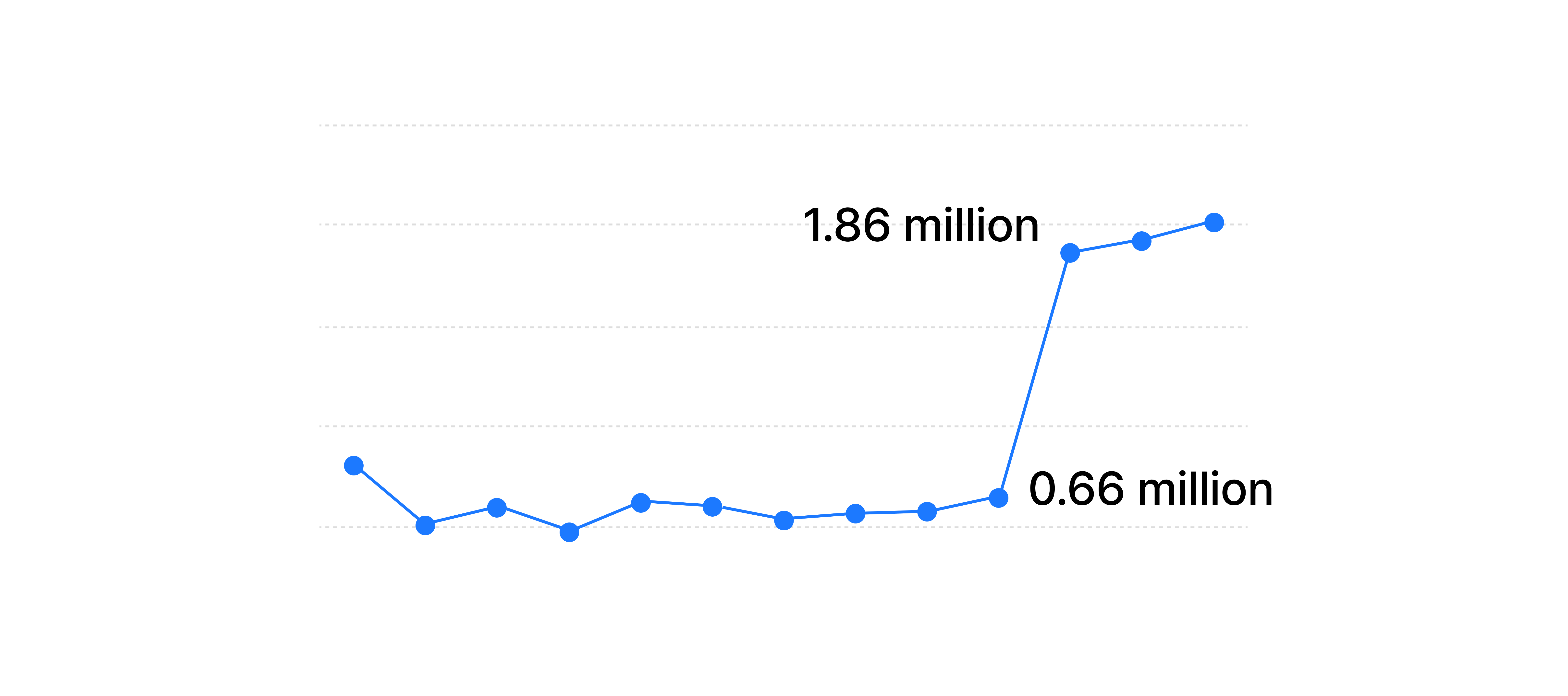

From there, many organizations began working to commercialize deep learning-based translators. After about three years of development, NAVER became the first to launch a deep learning-based translator for public use—ahead of Google. The engine powered Papago, which went on to become a leading translation service with 20 million monthly active users, recognized for its processing speed, natural output, and reliable quality.

The success of deep learning-based translators marked more than technological progress—it was the first real demonstration that AI could understand and process human language. This experience set the stage for broader advances in NLP, and eventually, for building LLMs.

Weekly translation volume tripled after NMT (neural machine translation) adoption

What’s ahead for AI services

If we trace the path from calculators to computers to smartphones, we can start to imagine where LLMs might be headed. Calculators began with a single function, then combined with keyboards, monitors, GUIs, and operating systems to become an entirely new kind of platform. LLMs are likely to follow a similar trajectory—merging with new interfaces and sensory inputs to evolve into something far more powerful.

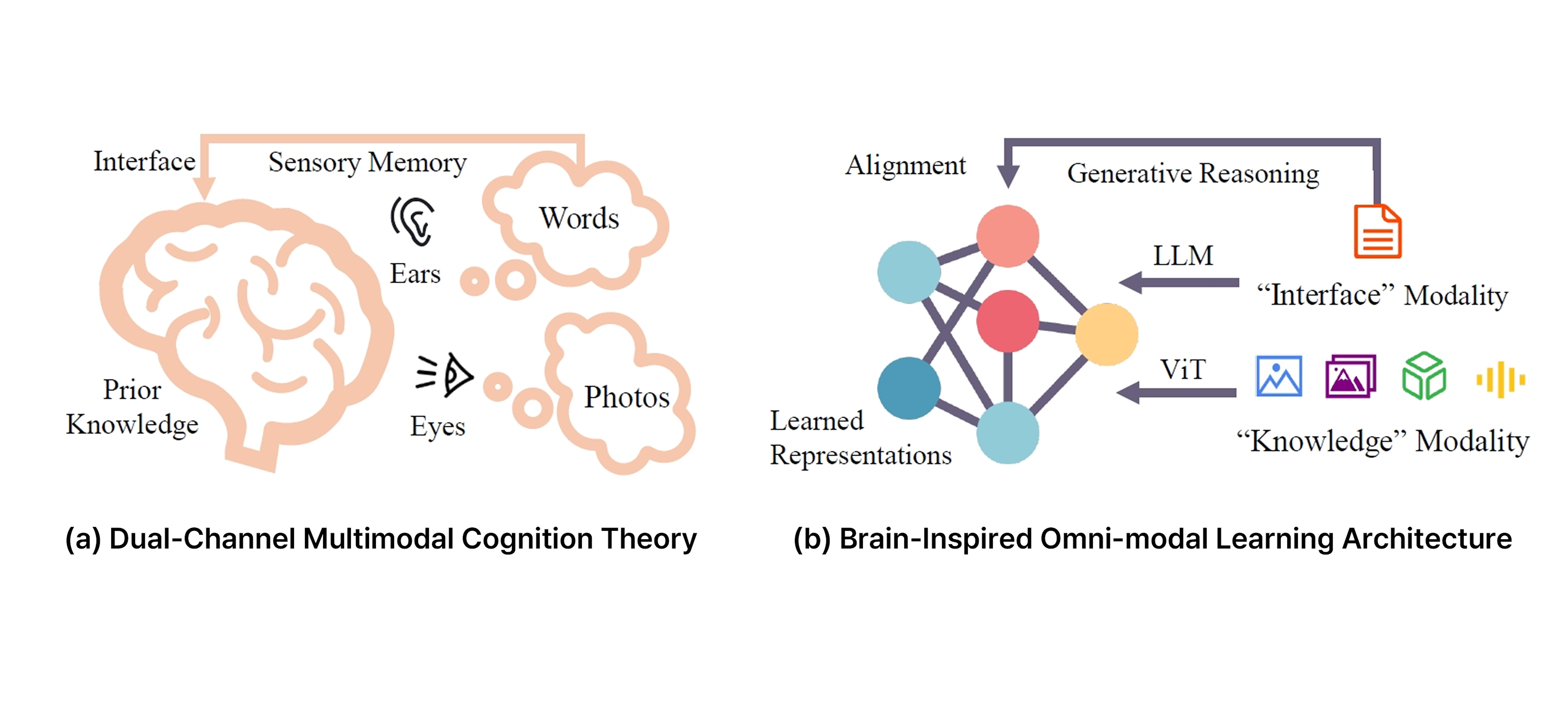

Today, AI is branching out in many directions: text-based LLMs, voice recognition, speech synthesis, image recognition, video analysis, and other cognitive technologies. Each mimics some aspect of human perception and is already being applied across a wide range of services. But so far, these technologies have mostly been developed separately, with different models for different domains, only connected when needed.

Omni-modal models: The next step toward true intelligence

AI is now moving in a new direction: training on multiple modalities at once. Models trained this way are known as omni-modal models. The approach is more than simply merging different features; it represents a step toward intelligence that can integrate different types of data and understand the relationships among them.

Just as deep learning achieved rapid breakthroughs by learning relationships between features on its own, omni-modal models train on text, audio, images, video, and sensor data simultaneously, reaching a level of understanding that separately trained, single-domain models cannot achieve.

This approach unlocks capabilities that text alone could never provide. For example:

- Detecting changes in a person’s voice to infer whether they have a cold, how they’re feeling, or whether they’ve been exercising

- Analyzing ambient sound and visual information together to understand situations the user may not be aware of

- Simulating a user’s imagination and quickly generating results close to reality

From this, a new form of AI is emerging—one that connects understanding, inference, and cognition in a more integrated way.

In some ways, this mirrors how human intelligence works: our senses, judgment, emotions, and reasoning all interact and influence one another. And as these models gain real-world interfaces, the potential for innovation grows.

What it takes to turn AI into a service

A good model alone doesn't make a good service. It took us three years to turn a deep learning-based machine translation model into the actual Papago service. Likewise, the four years between the release of GPT-1 in 2018 and the launch of ChatGPT in 2022 weren't spent only on model research; they were spent turning a model into a fully serviceable product.

Several important factors come into play when creating AI services:

- Usability

One of the biggest challenges we faced while building Papago was that typing foreign languages on a small smartphone screen is difficult. Because input was inconvenient, the translation experience couldn't be satisfying. That's why we developed a feature that recognizes and translates text in images. We built proprietary technology that could understand paragraphs within images and organize them into semantic units. To this day, this approach is regarded as more accurate and practical than LLM-based translation for certain use cases.

- Safety and reliability

AI is susceptible to absorbing bias from its training data. For instance, translations involving certain countries, religions, or political issues may come out distorted. And, as with early translators that referred to Trump as a businessman rather than as president, a lack of current data can lead to misunderstandings. Hallucinations and information omission remain important challenges for LLMs today.

- Infrastructure and cost efficiency

Running a large-scale model requires high-performance GPUs, fast response times, a reliable service environment, and cost efficiency. These aren’t about building better models—they’re about running services in a sustainable way.

To sum up, AI services can only earn users’ trust and loyalty when they deliver on performance, usability, safety, infrastructure, and cost efficiency.

Conclusion

The AI race may seem dominated by the U.S. and China, but Korea has built core capabilities in information search, voice, machine translation, and image processing that are just as strong. HyperCLOVA X is our proprietary LLM built on this foundation.

As AI advances, the competitive edge will shift from who made the best model to who turned it into an outstanding service. When omni-modal models surpass LLMs and become mainstream, AI will reshape user experience in ways we can’t yet imagine. At the center of this shift is user value—and services of the next AI era will be built on that foundation.

Learn more in KBS N Series, AI Topia, episode 4

You can see all of this in action in the fourth episode of KBS N Series’ AI Topia, where Shin Joonghwi, Head of AI applications at NAVER, breaks down these ideas with clear examples and helpful context. It’s a great way to get a fuller picture of what we’ve covered here!