Today we’re introducing HyperCLOVA X SEED Think, our new model with real Thinking power. This is the first open-source HyperCLOVA X model with reasoning capabilities, and it represents a major leap forward from the earlier HyperCLOVA X SEED model.

We’ve focused on developing three core AI agent capabilities:

- Breaking down complex problems into small units

- Choosing the right tools and functions for each situation

- Reflecting on and correcting its own mistakes

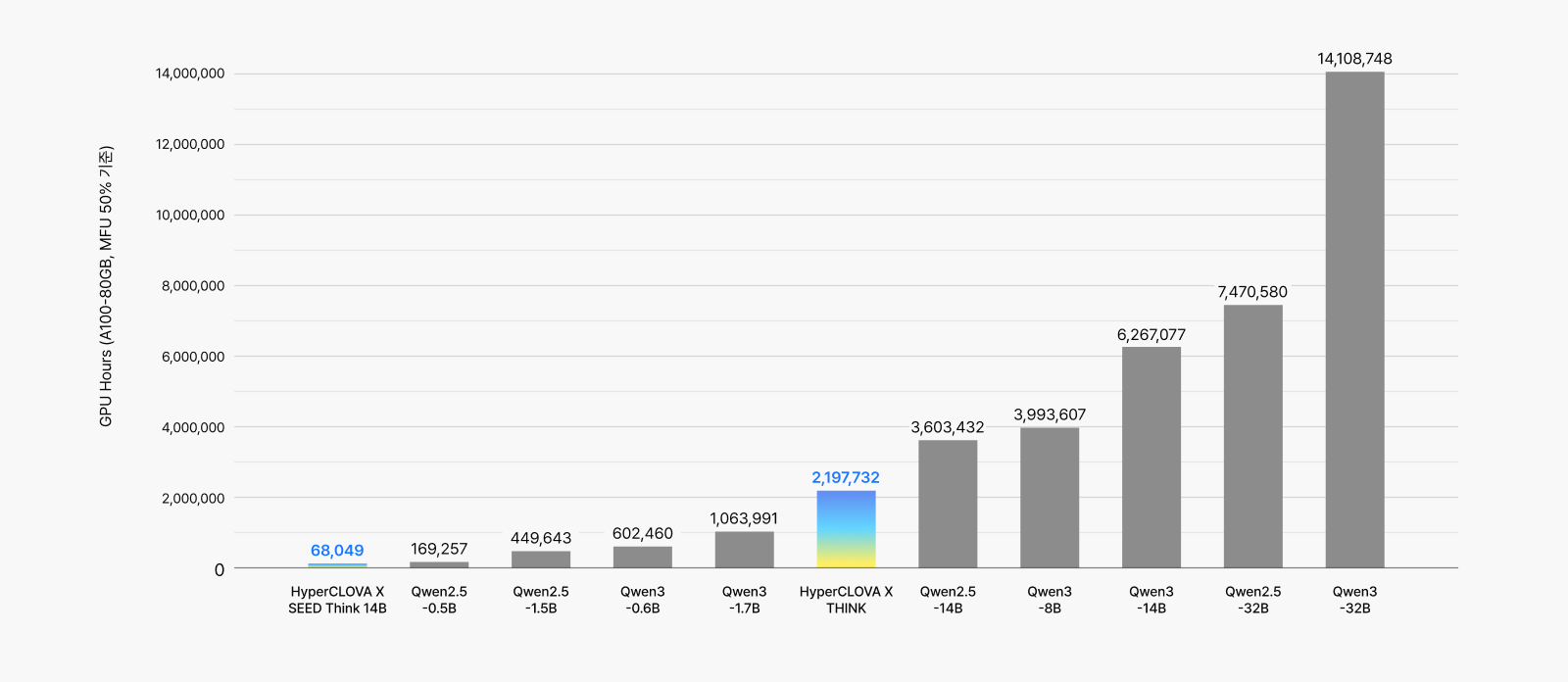

For developers and researchers working in AI, HyperCLOVA X SEED Think opens up new possibilities for innovation. Most remarkably, it delivers consistently outstanding results at just 1% of the training cost of global competitor models.

Just how powerful is 14 billion parameters?

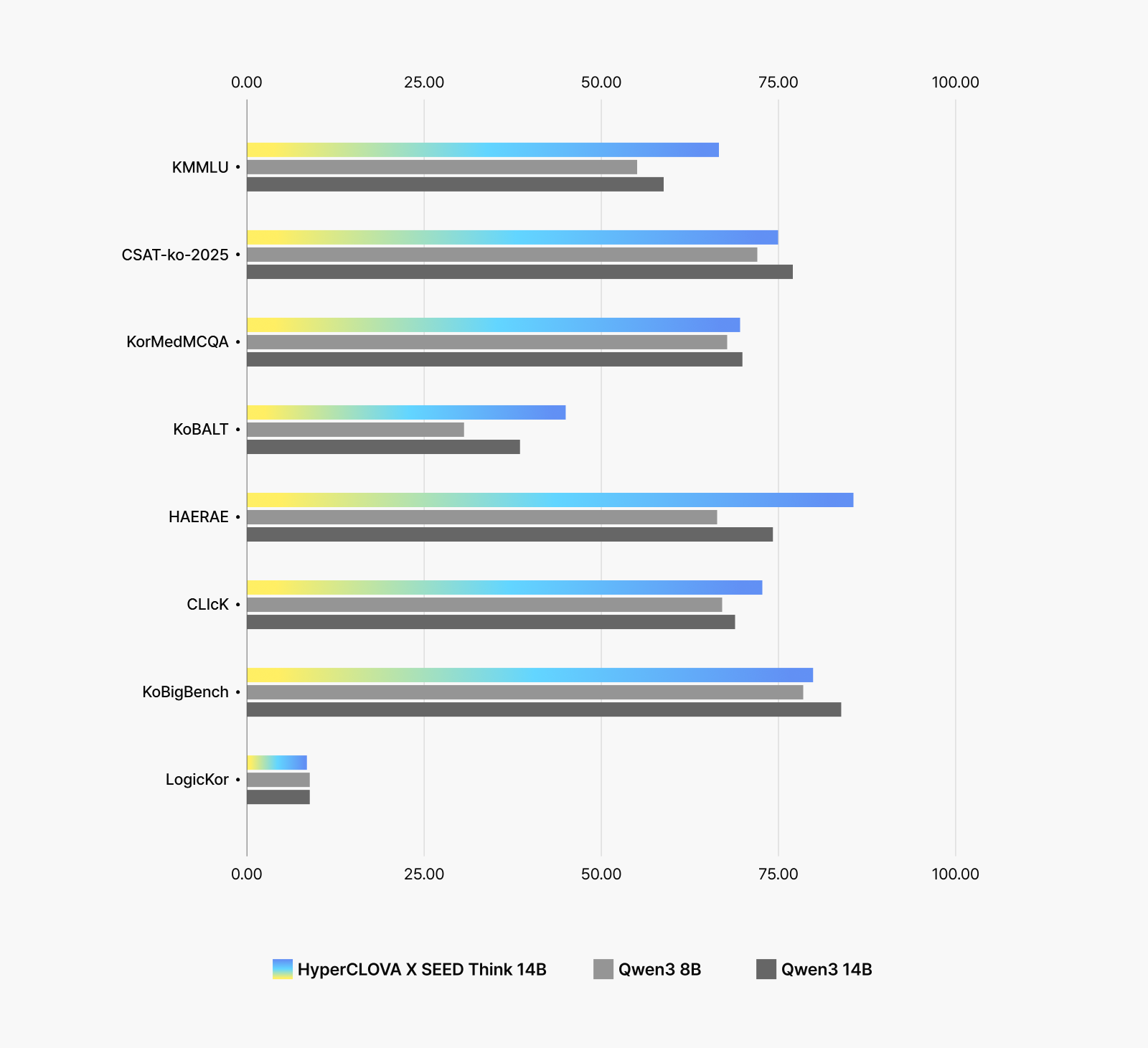

HyperCLOVA X SEED Think is a mid-sized model with 14 billion parameters. Compared to QWEN3-14B, a global model of similar scale, it shows clear superiority in Korean language proficiency and cultural understanding, while also demonstrating strong competitiveness in other areas closely related to AI agent capabilities.

The individual benchmarks highlight this strength clearly. On KoBALT, which emphasizes grammatical and semantic understanding, and HAE-RAE-Bench, which evaluates Korean history and general knowledge, HyperCLOVA X SEED Think consistently outperforms the other models.

Size isn’t everything: How we built an intelligent compact model

Rather than simply scaling up model size, HyperCLOVA X SEED Think focuses on maximizing performance within a compact architecture. Two core technologies drive this approach:

- Pruning and knowledge distillation

- Enhanced reasoning through reinforcement learning

These technologies were the keys to delivering high-performance LLM capabilities at significantly reduced costs.

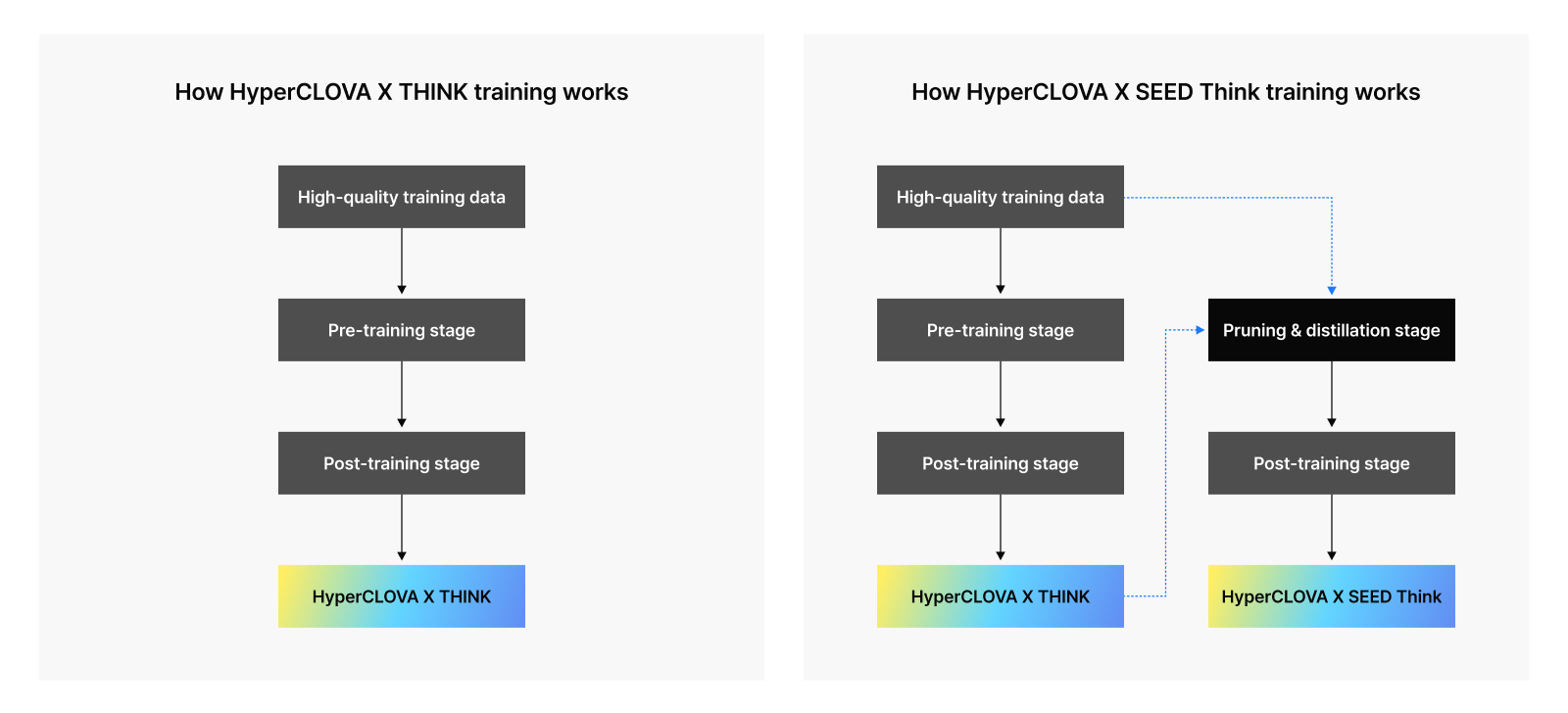

Pruning and distillation: Cutting out while passing on

Pruning and knowledge distillation are core techniques for creating lighter, more efficient models.

- Pruning removes unnecessary parameters from a trained model, reducing memory usage and computational resources.

- Knowledge distillation recovers any performance lost during pruning by transferring knowledge from large “teacher” models to smaller “student” models.

By strategically removing less important components while effectively transferring critical knowledge, we maintained high performance in a much more compact, efficient model.
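To make the two steps concrete, here is a minimal sketch in plain Python. This post does not specify the pruning criterion or the distillation loss, so the sketch assumes two common choices: magnitude pruning (dropping the weights with the smallest absolute values) and a KL-divergence loss between temperature-softened teacher and student outputs. The function names, the `sparsity` parameter, and the `temperature` value are illustrative assumptions, not details of the HyperCLOVA X pipeline.

```python
import math

def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (assumed criterion)."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

pruned = magnitude_prune([0.9, -0.05, 0.4, 0.01, -0.7, 0.02], sparsity=0.5)
print(pruned)  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0]
```

The loss is zero when the student reproduces the teacher's output distribution and grows as the two diverge, which is what lets a pruned student recover behavior it lost when weights were removed.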

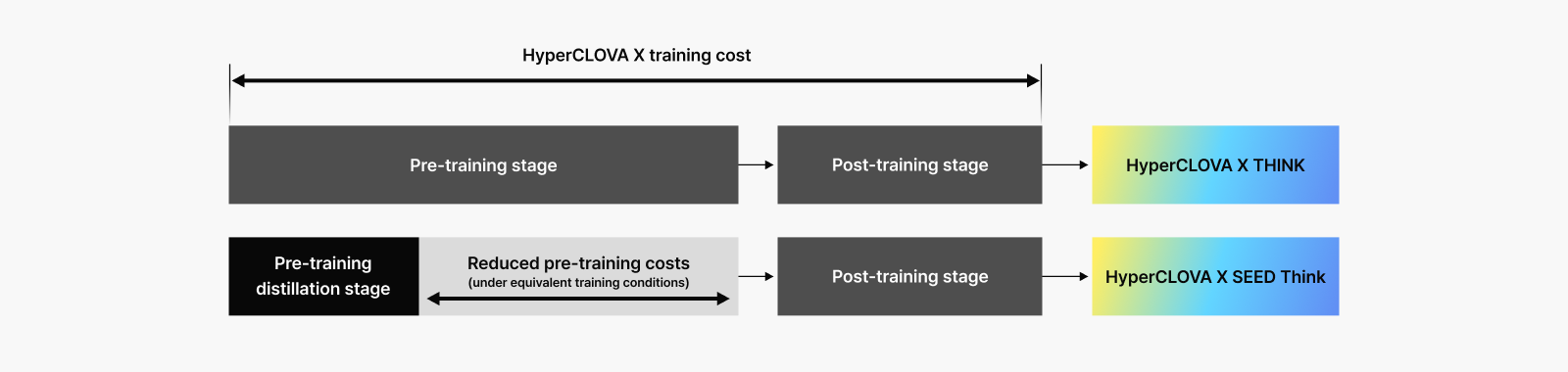

How effective was our approach? As the accompanying graph shows, HyperCLOVA X SEED Think achieved performance comparable to other models in its class at dramatically lower training cost.

There’s a foundational reason why these two technologies worked so effectively with HyperCLOVA X SEED Think.

First, we already had a high-performance flagship model to serve as our teacher. Second, our training methodology has been refined through extensive experimentation and optimization. These foundations enabled us to build a model that competes with similarly sized global models by learning from our larger, more capable teacher model while using just 1% of the typical training cost.

To learn more, read our post “Small but mighty: Making HyperCLOVA X both lightweight and efficient.”

How do you teach AI to really “Think”?

Simply inputting information into a lightweight model doesn’t give it reasoning capabilities. HyperCLOVA X SEED Think learned “how to Think” through a reinforcement learning-based training process with four key stages:

- Supervised fine-tuning (SFT)

- Reinforcement learning with verifiable rewards (RLVR)

- Length controllability (LC)

- Combined RLHF and RLVR training

First, in supervised fine-tuning, we focused on specific domains like math and coding, teaching the model to imitate each step of reasoning for highly challenging problems. This laid the groundwork for its reasoning capabilities.

Next, in reinforcement learning with verifiable rewards, we filtered out problems that were too easy or too difficult in order to maximize training efficiency. The model received rewards for correct answers, proper formatting, and appropriate language, allowing it to learn from clear feedback.
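A rule-based reward of this kind can be sketched as follows. Everything specific here is an assumption made for illustration: the `<think>...</think>` output format, the pass-rate thresholds used to filter problems, the reward weights, and the Hangul check used as a stand-in for "appropriate language." The actual rules used in training are not described in this post.

```python
import re

def filter_by_pass_rate(problems, low=0.1, high=0.9):
    """Keep problems the current model solves sometimes, but not always."""
    return [p for p in problems if low < p["pass_rate"] < high]

def contains_hangul(text):
    """True if the text contains at least one Hangul syllable."""
    return any("\uac00" <= ch <= "\ud7a3" for ch in text)

def verifiable_reward(response, reference_answer, expects_korean=False):
    """Sum three rule-based checks: format, correctness, and language."""
    # Assumed format: reasoning inside <think> tags, then the final answer.
    match = re.fullmatch(r"<think>(.*?)</think>\s*(.*)", response, flags=re.DOTALL)
    if match is None:
        return 0.0  # malformed output earns no reward
    reasoning, answer = match.group(1), match.group(2).strip()
    reward = 0.25  # format check passed
    if answer == reference_answer:
        reward += 1.0  # final answer matches the reference exactly
    if contains_hangul(reasoning) == expects_korean:
        reward += 0.25  # reasoning is written in the expected language
    return reward

print(verifiable_reward("<think>2+2=4</think>4", "4"))  # 1.5
print(verifiable_reward("4", "4"))                      # 0.0
```

Because each check is a deterministic rule, the reward is cheap to compute and hard to game compared with a learned reward model, which is the main appeal of verifiable rewards for math and coding problems.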

In the third stage, we added length control, rewarding the model for staying within specified response limits so it could learn to adjust its output length automatically.
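One simple way to express a length reward is shown below. The linear penalty, the `budget` parameter, and the `penalty_per_token` value are hypothetical; the post states only that the model was rewarded for staying within specified limits.

```python
def length_reward(num_tokens, budget, penalty_per_token=0.01):
    """Full reward within the budget, shrinking linearly beyond it (floor 0)."""
    if num_tokens <= budget:
        return 1.0
    overflow = num_tokens - budget  # tokens past the allowed limit
    return max(0.0, 1.0 - penalty_per_token * overflow)

for n in (100, 250, 1000):
    print(n, length_reward(n, budget=200))
```

A graded penalty like this gives the model a signal even when it overshoots slightly, whereas a hard cutoff would treat a response one token over the limit the same as one that is five times too long.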

Finally, we combined human feedback with verifiable rewards for reinforcement learning to improve both answer quality and logical reasoning simultaneously.
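As a rough illustration of how the two signals might be blended, the sketch below mixes a learned preference score (the RLHF side) with a rule-based score (the RLVR side). The weighted sum, the `weight` parameter, and the fallback for prompts without a reference answer are all assumptions; the post does not say how the rewards were combined.

```python
def combined_reward(preference_score, verifiable_score, has_reference, weight=0.5):
    """Blend a learned preference score with a rule-based score."""
    if not has_reference:
        # Open-ended prompts have nothing to verify, so only preference applies.
        return preference_score
    return weight * preference_score + (1.0 - weight) * verifiable_score

print(combined_reward(0.8, 1.0, has_reference=True))
print(combined_reward(0.8, 0.0, has_reference=False))
```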

Adding advanced reasoning capabilities to a pruned and distilled model is very challenging. Typically, when you reduce training costs or model size, reasoning capabilities decline proportionally. However, through years of research and experimentation, the HyperCLOVA X team successfully created a model whose reasoning capabilities rival those of much larger competitors.

Real-world examples

As an AI agent, HyperCLOVA X SEED Think excels at three core capabilities: solving complex problems step-by-step, picking the best tools and functions for each task, and self-correcting when it makes errors. Let’s see these capabilities in action through specific examples.

[Example 1: Solving a college entrance math exam question (Korean CSAT)]

The model formulates hypotheses and verifies them based on the given conditions.

[Example 2: Writing a simple piece of code]

The model decomposes the task into smaller subtasks, executes them step by step, explains the logic behind the output, and even suggests ways to improve the original request.

[Example 3: Planning a global animated series]

The model breaks down complex requirements and diverse settings to generate a detailed proposal.

You can download HyperCLOVA X SEED Think HERE.

With this release, we hope more developers and researchers will explore the possibilities of “Thinking AI.” We’ll keep evolving HyperCLOVA X into small but powerful models, building an AI ecosystem that’s open to everyone.